Introduction to DIMM and Its Relevance in Computing

The Dual Inline Memory Module, or DIMM, is a critical component in modern computing. Its primary role is to hold the Random Access Memory (RAM) chips that computers depend on for fast, efficient processing. DIMMs have evolved considerably from their predecessors, offering a wider data path and higher speeds. Unlike its forerunner, the Single Inline Memory Module (SIMM), a DIMM has separate electrical contacts on each side of the module, which doubles the available data path to 64 bits.

As processors advanced, the shift to DIMMs became essential. Since the Intel Pentium, mainstream processors have used a 64-bit memory data bus, and DIMMs are built to match that width, enabling faster data access and improved system performance. The move from 32-bit SIMMs to 64-bit DIMMs marked a significant leap in memory module design, laying the groundwork for the complex tasks that computers now handle with ease.

DIMM technology has improved continually, driven by ever-increasing demands for speed and capacity in computing devices. A DIMM provides a straightforward, reliable connection to a computer’s motherboard and serves as a fundamental component in desktops, laptops (in the smaller SO-DIMM form), and even printers. As the backbone of a system’s memory, DIMM technology has a far-reaching effect on the overall efficiency and capability of a computer.

In essence, DIMMs are an indispensable part of a computer’s architecture, enabling the machine to run applications smoothly, manage multiple tasks simultaneously, and maintain high performance. As the digital landscape progresses, the relevance of DIMMs in computing continues to grow, mirroring the need for faster, more resilient, and more powerful computing solutions.

Historical Progression from SIMMs to DIMMs

To appreciate the advances embodied by Dual Inline Memory Modules (DIMMs), it helps to revisit the historical migration from Single Inline Memory Modules (SIMMs). Earlier computers used SIMMs, whose 72-pin versions provided a narrower 32-bit data path. Although a SIMM has contacts on both sides of its board, the contacts on opposite sides are electrically connected, so together they carry only a single row of signals. As a result, SIMMs had to be installed in pairs to fill a 64-bit memory bus.

The shift to DIMMs was a significant breakthrough in memory technology. Unlike SIMMs, DIMMs carry independent contacts on each side of the module, enabling a direct 64-bit data path. This design change allowed a single DIMM to do what previously required two SIMMs, simplifying installation and improving data flow. The first widely used DIMMs had 168 pins, a direct response to the higher performance demands of evolving processors.

The progression from SIMMs to DIMMs wasn’t just a leap in pin counts or physical design; it represented a broader shift toward systems built around faster and more robust memory architectures. This evolution laid the groundwork for later memory modules to handle higher data rates and throughput, paving the way for modern high-capacity, high-speed computing. The transformation from SIMMs to DIMMs thus reflects the same drive toward optimization seen across computing as a whole.

Technical Specifications of DIMM: Understanding Pins and Data Transfer

A DIMM’s technical specifications are crucial for its compatibility and performance. They include the number of pins and the data transfer capabilities, both of which have evolved over time. The transition from SIMMs to DIMMs brought a higher pin count, which allowed a wider data path and therefore higher bandwidth.

DIMMs started with a 168-pin design to support 64-bit data transfer, matching the 64-bit data buses of processors such as the Pentium. As processors and applications required more data throughput, DIMMs evolved. Modern DIMMs, such as those based on DDR4 SDRAM, have 288 pins and support far higher data rates to and from the memory chips.

The number of pins on a DIMM is more than a physical attribute: it determines how the module connects to the motherboard and reflects the signals the module needs to send and receive data. For instance, DDR DIMMs use 184 pins, DDR2 and DDR3 DIMMs use 240, and DDR4 DIMMs use 288.

The increased pin count on modern DIMMs goes hand in hand with faster data transfer, but the data path itself has remained 64 bits wide (72 bits on ECC modules). Most of the gain comes from higher clock and transfer rates in the RAM chips, which let the same 64-bit pathway move far more data per second than before. By supporting faster transfer rates, modern DIMMs can move large blocks of data quickly, which is essential for high-performance computing tasks.
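A short worked example makes the relationship concrete. Peak theoretical bandwidth for one module is its transfer rate $R$ multiplied by the width $W$ of the data path, 64 bits or 8 bytes. Taking a DDR4-3200 module (3200 mega-transfers per second) as an illustrative case:

```latex
B_{\text{peak}} = R \times W
  = 3200\ \tfrac{\text{MT}}{\text{s}} \times 8\ \tfrac{\text{B}}{\text{transfer}}
  = 25\,600\ \tfrac{\text{MB}}{\text{s}} = 25.6\ \tfrac{\text{GB}}{\text{s}}
```

This is why DDR4-3200 modules are also labeled PC4-25600. Sustained throughput in practice is lower because of refresh cycles, command overhead, and access patterns.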

In summary, the technical specifications of DIMMs, particularly pin count and data transfer rate, are fundamental to their function. Higher pin counts have accompanied improved signaling and faster transfer rates, which together support the demands of modern computing.

The Various Types of DIMMs and Their Uses

DIMMs have evolved into several types to meet various system requirements. Each type of DIMM has specific characteristics and applications, responding to the different needs of devices ranging from personal computers to high-capacity servers.

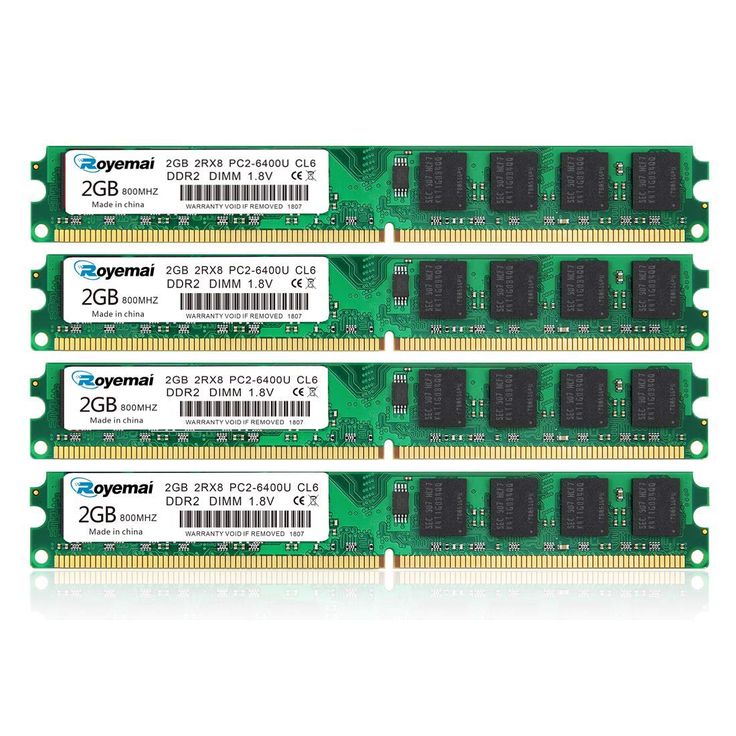

Unbuffered DIMM (UDIMM)

Unbuffered DIMMs, or UDIMMs, are commonly used in non-server systems, primarily desktops and laptops. Because the memory controller drives the DRAM chips directly, with no register or buffer in between, they offer slightly lower latency than buffered modules. The trade-off is electrical load: every additional UDIMM adds load on the controller’s signal lines, which limits how many modules a channel can reliably support.

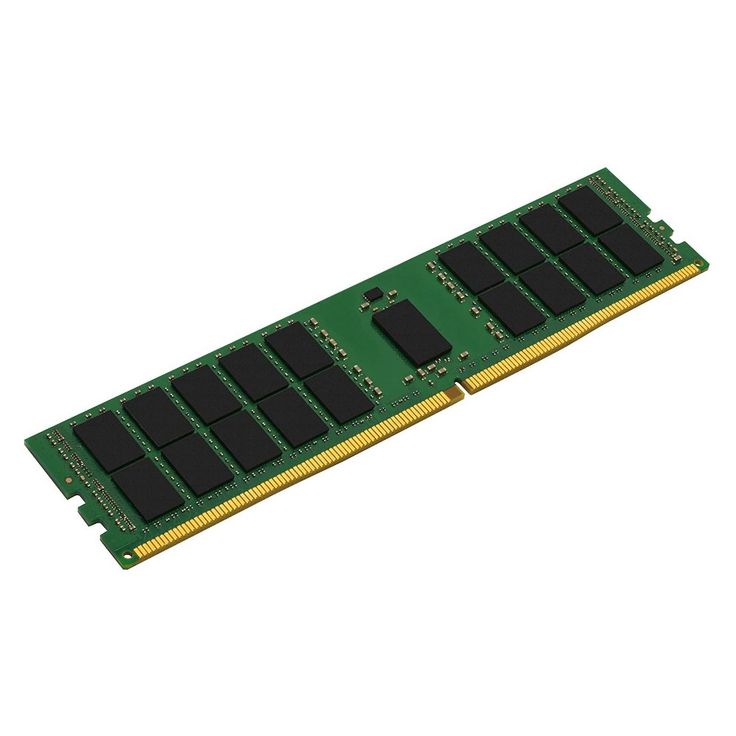

Registered DIMM (RDIMM)

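RDIMMs include a register that buffers the command and address signals between the memory controller and the DRAM chips; the data lines themselves are not buffered. Because the controller drives the register rather than every chip, electrical load drops, which improves signal stability and allows more modules per channel. This makes RDIMMs well suited to servers, where capacity and data integrity are crucial, even though the register adds roughly one clock cycle of latency.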

Fully-Buffered DIMM (FB-DIMM)

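FB-DIMMs place an Advanced Memory Buffer (AMB) on each module. The memory controller communicates with the AMB over a high-speed serial, point-to-point link protected by CRC error checking, and the AMB translates those requests into the parallel signals the DRAM chips expect. This improves signal integrity and lets a channel support more modules. FB-DIMMs were used in DDR2-era servers and workstations that needed high memory capacity and reliability, but the buffers added power, heat, and latency, and the design was later superseded by RDIMMs and LR-DIMMs.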

Load-Reduced DIMMs (LR-DIMMs)

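LR-DIMMs buffer the data lines as well as the command and address signals, so the memory controller sees a single electrical load per module instead of the load of every DRAM chip on it. This suits servers with high-density memory requirements: LR-DIMMs support larger capacities per module and can sustain higher speeds in heavily populated channels, at the cost of some added latency.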

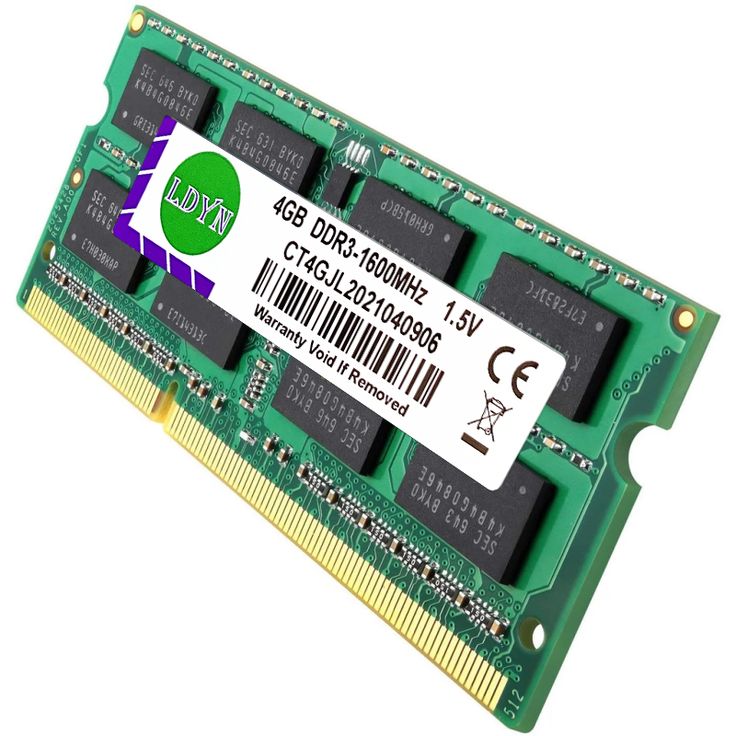

Small Outline DIMM (SO-DIMM)

SO-DIMMs are smaller and more compact than regular DIMMs, making them well suited to laptops and other space-constrained systems such as small form factor PCs. Despite their size, SO-DIMMs use the same memory technologies as full-size modules, just with a different pin count and layout: for example, 260 pins for DDR4 SO-DIMMs versus 288 for full-size DDR4 DIMMs.

The Role of Cooling Structures in DIMM Performance

In the realm of dual inline memory modules (DIMMs), cooling structures have become pivotal. They address the extra heat produced by denser and faster modules: as memory technologies such as DDR4 and its successors push data rates higher, the chips generate more heat. Efficient heat dissipation is therefore critical to maintaining system stability and performance.

Cooling structures range from basic heat spreaders to more elaborate fins or heat sinks attached to the DIMM itself. These act as thermal conduits, drawing heat away from the memory chips and releasing it into the surrounding environment. This process helps regulate the DIMM’s temperature, preventing overheating that can lead to system failure or diminished performance.

Heat spreaders are metal plates that cover the face of the DIMM, often made from aluminum or copper for their excellent thermal conductivity. They provide a large surface area that distributes the heat evenly, allowing air or liquid cooling systems to remove the heat more effectively. Advanced cooling structures might include heat pipes or vapor chambers, which use the phase change of a fluid to transfer heat efficiently even in high-density memory configurations.

Such cooling solutions have also helped manufacturers raise memory capacity without sacrificing clock speeds. Modern DIMMs are available in capacities of 64 GB and beyond, and some are fitted with dedicated heat sinks to handle the extra heat generated at such capacities and speeds.

The adoption of cooling mechanisms in DIMM design illustrates a crucial consideration beyond just memory capacity or speed. It underscores the importance of thermal management in ensuring the longevity and reliability of computer memory, especially as users demand more from their systems both in terms of workload and continuous operation times.

Selecting the Right DIMM: Compatibility and Performance Factors

Choosing the correct dual inline memory module (DIMM) hinges on understanding compatibility and performance factors. The motherboard’s specifications dictate the type and amount of memory it can support. When assessing DIMMs, consider capacity, speed, and type to ensure seamless operation with your system.

Compatibility Considerations

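When selecting a DIMM, make sure it matches the motherboard’s specifications. Check the form factor (full-size DIMM or SO-DIMM) and the pin count, since these determine physical fit and electrical connectivity. The memory generation, whether DDR, DDR2, DDR3, or DDR4, must match the motherboard’s design; each generation places the key notch in a different position, so a module from one generation will not seat in another generation’s slot. Also confirm that the board supports the module type, such as unbuffered or registered. Using an incompatible DIMM can prevent the system from booting or lead to unstable, suboptimal performance.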
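The checks above can be sketched as a small Python function. This is a simplified, hypothetical model: `Module`, `Board`, `compatibility_problems`, and the 32 GB default limit are illustrative assumptions, and real compatibility also depends on the board’s qualified memory list, firmware, ranks, and voltage. The pin-count table uses standard values for each generation and form factor.

```python
from dataclasses import dataclass, field

# Standard pin counts per (generation, form factor). DDR2 and DDR3 DIMMs share
# 240 pins, so pin count alone cannot tell them apart; the key notch does.
PIN_COUNT = {
    ("DDR", "DIMM"): 184,
    ("DDR2", "DIMM"): 240,
    ("DDR3", "DIMM"): 240,
    ("DDR4", "DIMM"): 288,
    ("DDR3", "SO-DIMM"): 204,
    ("DDR4", "SO-DIMM"): 260,
}


@dataclass
class Module:  # hypothetical description of a memory module
    generation: str   # e.g. "DDR4"
    form_factor: str  # "DIMM" or "SO-DIMM"
    kind: str         # "UDIMM", "RDIMM", or "LRDIMM"
    capacity_gb: int


@dataclass
class Board:  # hypothetical description of a motherboard's memory slots
    generation: str
    form_factor: str
    supported_kinds: set = field(default_factory=lambda: {"UDIMM"})
    max_module_gb: int = 32  # illustrative limit, not from any real board


def compatibility_problems(module: Module, board: Board) -> list:
    """Return reasons the module will not work in the board (empty if none found)."""
    problems = []
    mod_pins = PIN_COUNT.get((module.generation, module.form_factor))
    slot_pins = PIN_COUNT.get((board.generation, board.form_factor))
    if mod_pins != slot_pins:
        problems.append(f"pin count mismatch: {mod_pins} vs {slot_pins}")
    if module.generation != board.generation:
        problems.append(f"generation mismatch: {module.generation} module in {board.generation} slot")
    if module.kind not in board.supported_kinds:
        problems.append(f"{module.kind} not supported by this board")
    if module.capacity_gb > board.max_module_gb:
        problems.append(f"{module.capacity_gb} GB exceeds board limit of {board.max_module_gb} GB")
    return problems


if __name__ == "__main__":
    # A DDR3 module in a DDR2 board: pins match (240 vs 240),
    # but the generation check still flags it.
    print(compatibility_problems(Module("DDR3", "DIMM", "UDIMM", 8), Board("DDR2", "DIMM")))
```

Checking the generation separately from the pin count mirrors the physical reality described above: two generations can share a pin count yet still be keyed to reject each other.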

Performance Factors

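A DIMM’s performance depends mainly on its speed and latency. Speed, usually quoted as a data rate in mega-transfers per second (for example, DDR4-3200), indicates how fast data moves between the DIMM and the processor’s memory controller. Latency, commonly quoted as CAS latency (CL) in clock cycles, describes how long the memory takes to respond to a read command. Lower latency means faster response times, but because CL is counted in cycles, a faster module with a higher CL can respond just as quickly in nanoseconds. Aim for a balance between speed and latency to maximize your system’s efficiency.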
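Because CAS latency is counted in clock cycles, comparing modules at different speeds requires converting it to nanoseconds. DDR memory transfers data twice per clock, so one clock period lasts 2000 / (data rate in MT/s) nanoseconds. The helper below is a hypothetical sketch of that conversion; it covers only the CAS delay, not the full time needed to complete a memory access, and the module figures are illustrative examples.

```python
def cas_latency_ns(cas_latency: int, data_rate_mts: int) -> float:
    """Convert CAS latency (clock cycles) to nanoseconds for DDR memory.

    DDR moves data on both clock edges, so the clock runs at data_rate / 2 MHz
    and one cycle lasts 2000 / data_rate nanoseconds.
    """
    return cas_latency * 2000 / data_rate_mts


if __name__ == "__main__":
    # Illustrative modules, not taken from the article.
    for name, cl, rate in [("DDR4-2400 CL17", 17, 2400),
                           ("DDR4-3200 CL16", 16, 3200),
                           ("DDR4-3600 CL18", 18, 3600)]:
        print(f"{name}: {cas_latency_ns(cl, rate):.2f} ns")
```

The DDR4-3200 CL16 and DDR4-3600 CL18 modules both work out to 10 ns despite different CL values, while the DDR4-2400 CL17 module takes about 14.17 ns, which illustrates why speed and CL should be weighed together.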

DIMM Configuration

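How DIMMs are populated across the slots determines whether the system can use its multi-channel architecture; matched modules installed in the correct slots for dual-channel operation improve performance. Avoid mixing DIMMs of different speeds or capacities: mixed speeds usually force every module to run at the slowest module’s speed, and mismatched capacities can prevent full multi-channel operation or cause instability. For optimal results, use DIMMs with identical specifications.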
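The matching rule can be sketched in a few lines of Python. This is a simplified model that assumes mixed modules fall back to the slowest rated speed; the `Dimm` tuple and both functions are hypothetical illustrations, and real firmware also considers timings, ranks, and how many modules share a channel.

```python
from collections import namedtuple

# Hypothetical, simplified description of an installed module.
Dimm = namedtuple("Dimm", ["capacity_gb", "speed_mts"])


def is_matched(dimms) -> bool:
    """True when every module shares the same capacity and rated speed."""
    return len({(d.capacity_gb, d.speed_mts) for d in dimms}) == 1


def effective_speed_mts(dimms) -> int:
    """Assume the whole set runs at the slowest module's rated speed."""
    return min(d.speed_mts for d in dimms)


if __name__ == "__main__":
    kit = [Dimm(16, 3200), Dimm(16, 3200)]
    mixed = [Dimm(16, 3200), Dimm(8, 2666)]
    print(is_matched(kit), effective_speed_mts(kit))      # True 3200
    print(is_matched(mixed), effective_speed_mts(mixed))  # False 2666
```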

Future-Proofing

Think ahead when choosing DIMMs. If you plan to upgrade your system, consider modules with more capacity or higher speed ratings than you currently need. Current applications may not require the fastest DIMM, but having headroom allows for future growth without immediately replacing your memory.

Choosing the right DIMM goes beyond just fitting into the slot. It’s about understanding how it cooperates with your system to boost its performance and reliability. Assess compatibility, weigh performance factors, and configure correctly to unlock your computer’s potential.

The Impact of DIMMs on Multi-Channel Architecture and Memory Latency

The introduction of Dual Inline Memory Modules (DIMMs) has played a pivotal role in advancing computer memory architecture, particularly in multi-channel configurations and their effect on memory latency. A multi-channel architecture increases data throughput by providing more than one independent data path between the memory controller and the memory. Because the channels can transfer data simultaneously, theoretical peak bandwidth scales with the number of channels: two channels double it and three triple it compared with a single-channel setup, although real-world gains are usually smaller.
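The scaling of peak bandwidth with channel count can be expressed as a small Python function. It is a sketch that assumes each channel is 64 bits (8 bytes) wide, as with DDR4 DIMMs, and ignores every real-world overhead; `peak_bandwidth_gbs` is a hypothetical helper, not a library function.

```python
BUS_WIDTH_BYTES = 8  # one 64-bit channel, ECC bits excluded


def peak_bandwidth_gbs(data_rate_mts: int, channels: int = 1) -> float:
    """Theoretical peak bandwidth in decimal GB/s, ignoring all overheads."""
    return data_rate_mts * BUS_WIDTH_BYTES * channels / 1000


if __name__ == "__main__":
    for channels in (1, 2, 3, 4):
        print(f"DDR4-3200 x {channels} channel(s): "
              f"{peak_bandwidth_gbs(3200, channels):.1f} GB/s")
```

The result grows linearly with `channels` by construction; measured bandwidth depends on the memory controller and workload and will fall short of these figures.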

DIMMs are essential in multi-channel setups because they provide the physical interface for each channel. By installing DIMMs in matched pairs (or sets of three or four for triple- and quad-channel architectures), users can take full advantage of this technology. Matching DIMMs by capacity and speed helps the system run efficiently and without conflicts. A correctly populated configuration brings notable bandwidth gains and reduces bottlenecks caused by data transfer constraints.

DIMM configuration also affects memory latency, the delay between the processor issuing a command and the memory carrying it out. Multi-channel operation does not shorten the latency of an individual access, but because requests to different channels can be serviced at the same time, less time is spent queuing under heavy load, which can lower the average effective latency. The exact impact varies with the memory controller’s efficiency and the tasks being executed.

To sum up, DIMMs are integral to multi-channel memory architectures. By enabling parallel data transfers, they increase bandwidth and can reduce average latency under load, making them indispensable in the pursuit of higher-performing computing systems.

Future Trends and Developments in DIMM Technology

Looking ahead, dual inline memory module technology is set for further development. With relentless demand for faster and more capable computing systems, DIMM specifications will likely keep advancing. Future modules may adopt higher pin counts or new connector designs, although the move from DDR4 to DDR5 kept the 288-pin count and gained speed mainly through higher transfer rates.

Industry efforts also focus on higher capacities. Server RDIMMs and LR-DIMMs already exceed 64 GB per module, and denser DRAM chips continue to push capacities upward. This evolution is critical for data-intensive applications such as large-scale databases and real-time analytics.

Error correction and signal integrity are advancing as well. DDR5, for example, adds on-die ECC (Error-Correcting Code) inside each DRAM chip, complementing the module-level ECC used in servers, so that data reliability keeps pace with growing capacities and speeds.

Innovations in cooling technologies will also be paramount. As DIMMs become denser and faster, effective heat dissipation methods must evolve. Expect to see more sophisticated cooling solutions, such as integrated liquid cooling, to manage the heat of future high-performance DIMMs.

Another trend is the shift toward lower-voltage DIMMs. Reduced voltage not only saves energy but also minimizes heat production. The standard supply voltage has dropped with each DDR generation, from 1.2 V for DDR4 to 1.1 V for DDR5.
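A rough worked estimate shows why even a 0.1 V reduction matters. The dynamic switching power of CMOS circuits scales approximately as $C V^2 f$ (switched capacitance, supply voltage, frequency). Assuming $C$ and $f$ stay constant, which is a simplification since newer modules also run faster, moving from 1.2 V to 1.1 V gives:

```latex
P_{\text{dyn}} \propto C\,V^{2} f
\quad\Longrightarrow\quad
\frac{P_{\text{dyn}}(1.1\ \text{V})}{P_{\text{dyn}}(1.2\ \text{V})}
  = \left(\frac{1.1}{1.2}\right)^{2} = \frac{1.21}{1.44} \approx 0.84
```

That is roughly 16% less dynamic power from the voltage change alone, before accounting for other design differences.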

Finally, the industry is moving to next-generation memory standards. DDR5 modules are already on the market, and future standards will push data transfer speeds even further and open new frontiers for computing performance.

As we move forward, the dual inline memory module stands as a key enabler of computing evolution, shaping how we process and manage our ever-growing data pools.